Customizing a large language model (LLM) to perform a specific task can provide a significant competitive advantage, but the process has traditionally been complex and expensive. This guide to fine-tuning open-source LLMs with LoRA demystifies the process and offers a practical, efficient method. LoRA makes AI customization accessible without massive computational resources, opening the door to innovation across a wide range of applications.

What is LoRA and why use it for fine-tuning

Why LoRA is a game changer for LLM tuning

Fully fine-tuning a large language model is resource-intensive. The process updates every one of the model's billions of parameters, and the GPU memory needed to hold their gradients and optimizer states puts it out of reach for most teams. Parameter-Efficient Fine-Tuning (PEFT) methods were created to solve this problem: they train only a small number of parameters, providing an accessible path to customizing models without massive costs.

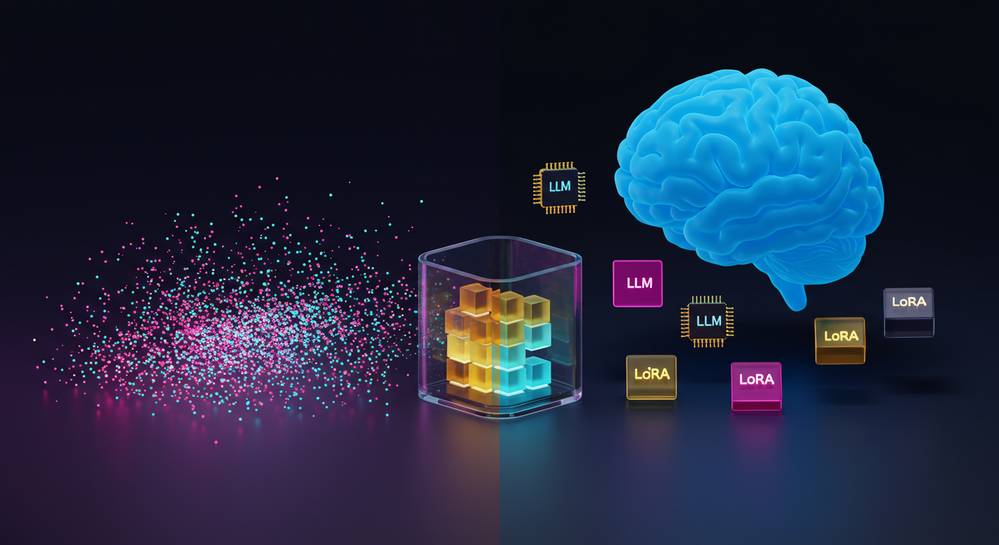

LoRA, or Low-Rank Adaptation, is a leading PEFT technique that has become standard practice. It works by freezing the LLM’s original weights and injecting small, trainable matrices into selected layers. For a frozen weight matrix W, LoRA learns an update expressed as the product of two thin matrices, B and A, whose shared inner dimension is the rank r; because r is small, the pair holds far fewer values than W itself. Only these new matrices are trained, which is the key to LoRA's efficiency.

- Lower computational cost: Training a fraction of the parameters significantly reduces GPU memory needs.

- Increased training speed: With fewer parameters to update, the training process is much faster.

- Portable and modular: The output is a small file of adapter weights, easy to share and apply to the base model.

- Knowledge retention: Because the original weights stay frozen, the model is less prone to forgetting its vast pre-trained knowledge.
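To make the freeze-and-inject idea concrete, here is a toy, dependency-free sketch of a single LoRA-adapted linear layer. It follows the formulation from the LoRA paper, where the layer computes `W x + (alpha / r) * B (A x)`: the pre-trained matrix `W` is frozen, `A` and `B` are trainable, and `B` starts at zero so the adapted layer initially behaves exactly like the original. The class and helper names are hypothetical illustrations, not PEFT's API; real implementations operate on PyTorch tensors on a GPU.

```python
import random


def matvec(M, v):
    """Multiply a matrix M (a list of rows) by a vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


class LoRALinear:
    """Toy LoRA layer computing y = W x + (alpha / r) * B (A x)."""

    def __init__(self, W, r, alpha, seed=0):
        d_out, d_in = len(W), len(W[0])
        rng = random.Random(seed)
        self.W = W  # frozen pre-trained weight, shape d_out x d_in (never updated)
        # Trainable low-rank factors: A is r x d_in (small random init),
        # B is d_out x r (zero init, so the initial update B A is zero).
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scaling = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)                    # frozen path
        update = matvec(self.B, matvec(self.A, x))  # low-rank trainable path
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B are trained: r * (d_in + d_out) values."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_fraction(d_out, d_in, r):
    """Share of a full d_out x d_in matrix's parameter count that LoRA trains."""
    return r * (d_in + d_out) / (d_in * d_out)


W = [[1.0, 2.0, 0.0],
     [0.0, 1.0, -1.0]]
layer = LoRALinear(W, r=1, alpha=2)
print(layer.forward([1.0, 1.0, 1.0]))  # [3.0, 0.0]: equals W x because B starts at zero
print(layer.trainable_params())        # 5 (A: 1x3, B: 2x1) versus 6 weights in W
print(f"{lora_param_fraction(4096, 4096, 8):.2%}")  # 0.39%
```

The last line shows why LoRA is cheap at scale: at rank 8, the adapter for a 4096 x 4096 weight matrix has 65,536 trainable values instead of 16,777,216.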

Preparing your environment and dataset

Before you begin fine-tuning, set up a suitable environment and prepare a high-quality dataset. The success of your fine-tuned model depends heavily on these preliminary steps.

Essential tools and libraries

Your project will rely primarily on the Hugging Face ecosystem, so you need to install several key Python libraries. A working knowledge of Python and a basic understanding of how neural networks are trained will help. The core components include:

- PyTorch: The fundamental framework for building and training neural networks.

- Transformers: A Hugging Face library for loading and running the pre-trained LLM you will fine-tune.

- Datasets: A library for efficiently loading and processing your training data.

- PEFT: The library that provides the LoRA implementation.

- TRL: A library with helpful tools like the SFTTrainer for supervised fine-tuning.
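These libraries are installed from PyPI, typically with `pip install torch transformers datasets peft trl`, plus `bitsandbytes` if you plan to load the model in 4-bit. The small script below is a sketch for confirming what is importable before you start; the package list is an assumption you should adjust to your own setup.

```python
import importlib.util

# Assumed package list; bitsandbytes is only needed for 4-bit loading.
PACKAGES = ["torch", "transformers", "datasets", "peft", "trl", "bitsandbytes"]


def check_packages(names):
    """Return {package name: True if it can be imported here, else False}."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_packages(PACKAGES).items():
        print(f"{name:<14}{'ok' if ok else 'MISSING'}")
```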

Choosing a base model and preparing data

Start by selecting an open-source LLM from the Hugging Face Hub. Models like Mistral 7B or Llama 2 7B are excellent starting points thanks to their strong performance and manageable size. Your dataset is the most critical element. It should be a collection of high-quality examples of your specific task; for instruction fine-tuning, these are commonly stored as JSONL (one JSON object per line), with each record holding a prompt and the desired response. Ensure your data is clean, relevant, and diverse enough to teach the model the desired new behavior without introducing unwanted biases.
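As a sketch of what a prepared instruction dataset can look like, the snippet below turns raw instruction/response pairs into JSONL records with a single `text` field, dropping incomplete examples and exact duplicates. The field names, the `### Instruction:` / `### Response:` prompt template, and the file name `train.jsonl` are all hypothetical; if your base model has its own chat or prompt format, use that instead.

```python
import json
from pathlib import Path

# Hypothetical prompt template; match it to the format your base model expects.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{response}"


def build_records(examples):
    """Turn raw {"instruction", "response"} dicts into {"text": ...} records.

    Examples with an empty field are skipped, and exact duplicates are kept once.
    """
    seen = set()
    records = []
    for ex in examples:
        instruction = ex.get("instruction", "").strip()
        response = ex.get("response", "").strip()
        if not instruction or not response:
            continue  # incomplete example: nothing to learn from
        text = TEMPLATE.format(instruction=instruction, response=response)
        if text in seen:
            continue  # exact duplicate
        seen.add(text)
        records.append({"text": text})
    return records


def write_jsonl(records, path):
    """Write one JSON object per line (the JSONL format)."""
    with Path(path).open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


raw = [
    {"instruction": "Define LoRA in one sentence.",
     "response": "LoRA fine-tunes a frozen model by training small low-rank adapter matrices."},
    {"instruction": "Define LoRA in one sentence.",
     "response": "LoRA fine-tunes a frozen model by training small low-rank adapter matrices."},
    {"instruction": "Translate 'hello' to French.", "response": ""},
]
records = build_records(raw)
write_jsonl(records, "train.jsonl")
print(len(records))  # 1: the duplicate and the empty response are dropped
```

A single `text` column is a convenient target because recent TRL versions have `SFTTrainer` read a column with that name by default.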

The step-by-step fine-tuning process with LoRA

With your environment ready and dataset prepared, you can proceed to the actual fine-tuning. This involves loading the model, configuring LoRA, and launching training with specialized tools from Hugging Face.

Step 1: Load the base model and tokenizer

First, load your chosen pre-trained model and its corresponding tokenizer from the Hugging Face Hub. Loading the model in a lower-precision format such as 4-bit is recommended: quantization drastically reduces the memory footprint, allowing you to fine-tune larger models on consumer-grade GPUs with little loss in quality. Training LoRA adapters on top of a 4-bit quantized base model is the approach known as QLoRA.
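A sketch of Step 1 using the Transformers bitsandbytes integration, assuming a CUDA GPU, the `bitsandbytes` package, and Mistral 7B (`mistralai/Mistral-7B-v0.1`) as the example model ID. The NF4 settings mirror the QLoRA recipe but are choices, not requirements. The imports sit inside `load_base_model` so the file can be read and tested on a machine without the GPU stack. The hypothetical helper `weight_memory_gb` is a back-of-the-envelope calculation showing why 4-bit loading matters; it counts weight storage only and ignores activations, quantization constants, and framework overhead.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Rough memory for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def load_base_model(model_id="mistralai/Mistral-7B-v0.1"):
    """Load a causal LM in 4-bit NF4 together with its tokenizer."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    bnb_config = BitsAndBytesConfig(
        load_in_4bit=True,                      # store weights in 4-bit
        bnb_4bit_quant_type="nf4",              # NormalFloat4, as in QLoRA
        bnb_4bit_use_double_quant=True,         # also quantize the quantization constants
        bnb_4bit_compute_dtype=torch.bfloat16,  # run matmuls in bfloat16
    )
    model = AutoModelForCausalLM.from_pretrained(
        model_id, quantization_config=bnb_config, device_map="auto"
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token  # many base models ship without a pad token
    return model, tokenizer


print(weight_memory_gb(7e9, 16))  # 14.0 (16-bit weights)
print(weight_memory_gb(7e9, 4))   # 3.5  (4-bit weights)
```

On a GPU machine, `model, tokenizer = load_base_model()` downloads the weights from the Hub on first use and returns the quantized model ready for adapter training.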

Step 2: Configure LoRA parameters

Next, you create a LoraConfig object from the PEFT library. This is where you define how LoRA will be applied to your model. Key parameters include:

- r: The rank of the update matrices. A lower rank means fewer trainable parameters; common values are 8, 16, and 32.

- lora_alpha: A scaling factor for the learned update; PEFT multiplies the update by lora_alpha / r. A common practice is to set it to twice the rank.

- target_modules: A list of the modules that receive LoRA adapters, such as the attention projection layers (q_proj, k_proj, v_proj, and o_proj in Llama- and Mistral-style models).

- task_type: The type of task you are fine-tuning for, such as CAUSAL_LM for text generation.
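Putting these parameters together, a minimal sketch of a PEFT `LoraConfig` for a Llama- or Mistral-style model might look like the following. The rank, the extra `lora_dropout` and `bias` options, and the choice of the four attention projections are assumptions to tune, not recommendations from this guide; the import sits inside the function so the rest of the file runs without PEFT installed. The hypothetical helper `lora_trainable_params` shows how the trainable-parameter count grows linearly with `r`, using made-up square 4096 x 4096 projection shapes (real models with grouped-query attention have smaller key and value projections).

```python
def make_lora_config(r=16, target_modules=("q_proj", "k_proj", "v_proj", "o_proj")):
    """Build a PEFT LoraConfig with lora_alpha = 2 * r."""
    from peft import LoraConfig

    return LoraConfig(
        r=r,                                  # rank of the update matrices
        lora_alpha=2 * r,                     # update is scaled by lora_alpha / r (here 2.0)
        target_modules=list(target_modules),  # module names differ between architectures
        lora_dropout=0.05,                    # dropout on the LoRA path (assumed value)
        bias="none",                          # leave bias terms frozen
        task_type="CAUSAL_LM",                # text generation
    )


def lora_trainable_params(shapes, r, n_layers):
    """Each adapted (d_in, d_out) matrix adds r * (d_in + d_out) parameters."""
    return n_layers * sum(r * (d_in + d_out) for d_in, d_out in shapes)


# Hypothetical model: 32 layers, four 4096 x 4096 attention projections per layer.
shapes = [(4096, 4096)] * 4
for r in (8, 16, 32):
    print(r, lora_trainable_params(shapes, r, n_layers=32))
# 8 8388608
# 16 16777216
# 32 33554432
```

Once a model is wrapped with LoRA, PEFT's `print_trainable_parameters()` method reports the actual count for your architecture.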

Step 3: Initiate and run the training

Finally, combine the model, dataset, tokenizer, and LoRA configuration in an SFTTrainer. You also define TrainingArguments (or, in recent TRL versions, its subclass SFTConfig) to control settings such as the learning rate and number of epochs. Once everything is set up, call trainer.train() to start fine-tuning. When training completes, save the resulting adapter model; this creates a small directory containing only the trained LoRA weights and their configuration, not a copy of the base model.
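A sketch of Step 3 with TRL, assuming a recent TRL release in which training options live in `SFTConfig` (a subclass of `TrainingArguments`) and the tokenizer is passed as `processing_class`; older releases used `tokenizer=` and accepted some options directly on `SFTTrainer`, so check the version you have installed. It also assumes a `train.jsonl` file whose records carry a `text` field. The function name `train_lora` is hypothetical, and every hyperparameter value is an illustrative starting point.

```python
def train_lora(model, tokenizer, lora_config, data_file="train.jsonl", output_dir="lora-out"):
    """Fine-tune LoRA adapters on a JSONL dataset and save only the adapter.

    Imports are inside the function so this file loads without the training stack.
    """
    from datasets import load_dataset
    from trl import SFTConfig, SFTTrainer

    dataset = load_dataset("json", data_files=data_file, split="train")

    args = SFTConfig(
        output_dir=output_dir,
        num_train_epochs=1,             # illustrative values; tune for your data
        per_device_train_batch_size=4,
        gradient_accumulation_steps=4,  # effective batch size of 16 per device
        learning_rate=2e-4,
        logging_steps=10,
        bf16=True,                      # requires a GPU with bfloat16 support
    )
    trainer = SFTTrainer(
        model=model,                    # the (quantized) base model
        args=args,
        train_dataset=dataset,          # the "text" column is used by default
        processing_class=tokenizer,
        peft_config=lora_config,        # SFTTrainer wraps the model with LoRA adapters
    )
    trainer.train()
    trainer.save_model(output_dir)      # writes the adapter weights and adapter_config.json
    return trainer
```

Pass it the quantized base model and tokenizer from Step 1 and the `LoraConfig` from Step 2; because `peft_config` is supplied, the base model itself should not already be wrapped with LoRA.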

Best practices and evaluating your fine tuned model

Successfully training a model is just one part of the equation. Following best practices during setup and properly evaluating the output are crucial for achieving high-quality results, and they ensure your fine-tuned model actually performs as expected.

Tips for achieving better results

The quality of your fine-tuned model depends heavily on your configuration and data. Pay close attention to hyperparameters like the learning rate: a value that is too high can destabilize training, while one that is too low can leave the model barely changed. Experiment with different LoRA ranks to find the right balance between task performance and parameter efficiency. Most importantly, no amount of tuning can fix a poor-quality dataset, so make sure your data is clean, diverse, and accurately represents the task you want the model to perform.
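One lightweight way to organize rank and learning-rate experiments is to enumerate a small grid of run settings up front, keeping `lora_alpha` at twice the rank as in the convention from Step 2. This is a hypothetical helper with illustrative values; each resulting dictionary would feed your own training run, and the runs would then be compared on examples the model did not train on.

```python
from itertools import product


def sweep_grid(ranks=(8, 16, 32), learning_rates=(1e-4, 2e-4)):
    """Yield one run configuration per (rank, learning rate) pair."""
    for r, lr in product(ranks, learning_rates):
        yield {
            "r": r,
            "lora_alpha": 2 * r,  # keep the alpha = 2 * r convention
            "learning_rate": lr,
            "run_name": f"r{r}-lr{lr:g}",
        }


for config in sweep_grid():
    print(config["run_name"])
# r8-lr0.0001
# r8-lr0.0002
# r16-lr0.0001
# r16-lr0.0002
# r32-lr0.0001
# r32-lr0.0002
```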

Merging weights and running inference

After training, you have two main options for using your model. You can keep the base model and the LoRA adapter separate, loading the adapter on top of the base model each time you run inference. This approach saves storage and is flexible, since several small adapters can share one copy of the base model. Alternatively, for production environments where latency is critical, you can merge the LoRA weights directly into the base model. This creates a new, standalone model that produces the same outputs (up to numerical precision) without the extra computation of the adapter layers; the trade-off is that each merged model is a full-size copy.
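Both options can be sketched with PEFT's `AutoPeftModelForCausalLM`, which reads the base model's name from the saved adapter configuration and loads the adapter on top of it. The sketch assumes an adapter directory named `lora-out` that also contains the saved tokenizer, and enough memory to load the base model in bfloat16; merging is done on this unquantized copy rather than on the 4-bit model used for training. The function names are hypothetical, and the imports sit inside the functions so the file loads without the model stack.

```python
def load_for_inference(adapter_dir="lora-out", merge=False):
    """Load the base model with the LoRA adapter; optionally fold the adapter in."""
    import torch
    from peft import AutoPeftModelForCausalLM
    from transformers import AutoTokenizer

    model = AutoPeftModelForCausalLM.from_pretrained(
        adapter_dir, torch_dtype=torch.bfloat16, device_map="auto"
    )
    if merge:
        # W <- W + (lora_alpha / r) * B A in every adapted layer; adapter modules are removed.
        model = model.merge_and_unload()
    tokenizer = AutoTokenizer.from_pretrained(adapter_dir)
    return model, tokenizer


def generate(model, tokenizer, prompt, max_new_tokens=64):
    """Generate a completion for a single prompt with sampling disabled."""
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For deployment, calling `load_for_inference(merge=True)` and then `model.save_pretrained("merged-model")` produces a standalone checkpoint that no longer needs PEFT at load time.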

LoRA fundamentally changes the economics of customizing large language models. It turns an expensive, resource-heavy process into an accessible and efficient one, empowering more developers and researchers to build specialized AI solutions. By following the steps outlined above, you can adapt powerful open-source LLMs to your unique needs. To explore more insights on AI, technology, and Web3, visit Virtual Tech Vision for expert guides and analysis.