As technology continues to advance at an unprecedented rate, the use of artificial intelligence (AI) has become increasingly prevalent in many areas of our lives. From generative AI that can create any content using a simple prompt to smart home devices that learn our habits and preferences, AI has the potential to revolutionize the way we interact with technology.

However, as the amount of data we generate and share online grows exponentially, privacy concerns have become more pressing than ever before. Therefore, as a journalist for Virtual Tech Vision, I think it is important to explore the topic of privacy in the age of AI and delve into how AI impacts our personal data and privacy.

We will examine the potential benefits and risks associated with AI in regard to privacy and discuss what individuals, organizations, and governments can do to protect our personal data in this new age of technology.

Contents

Importance of Privacy in the Digital Era

In the digital era, personal data has become an incredibly valuable commodity. The vast amount of data generated and shared online daily has enabled businesses, governments, and organizations to gain new insights and make better decisions. However, this data also contains sensitive information that individuals may not want to share or organizations have used without their consent. That is where privacy comes in.

Privacy is the right to keep personal information confidential and free from unauthorized access. It is an essential human right that ensures individuals have control over their personal data and how it is used. Today, privacy is more important than ever as the amount of personal data collected and analyzed continues to grow.

Privacy is crucial for a variety of reasons. For one, it protects individuals from harm, such as identity theft or fraud. It also helps to maintain individual autonomy and control over personal information, which is essential for personal dignity and respect. Furthermore, privacy allows individuals to maintain their personal and professional relationships without fear of surveillance or interference. Last but not least, it protects our free will; if all our data is publicly available, toxic recommendation engines will be able to analyze our data and use it to manipulate individuals into making certain (buying) decisions.

In the context of AI, privacy is essential to ensure that AI systems are not used to manipulate individuals or discriminate against them based on their personal data. AI systems that rely on personal data to make decisions must be transparent and accountable to ensure that they are not making unfair or biased decisions.

The importance of privacy in the digital era cannot be overstated. It is a fundamental human right that is necessary for personal autonomy, protection, and fairness. As AI continues to become more prevalent in our lives, we must remain vigilant in protecting our privacy to ensure that technology is used ethically and responsibly.

Privacy Challenges in the Age of AI

AI presents a challenge to the privacy of individuals and organizations because of the complexity of the algorithms used in AI systems. As AI becomes more advanced, it can make decisions based on subtle patterns in data that are difficult for humans to discern. This means that individuals may not even be aware that their personal data is being used to make decisions that affect them.

The Issue of Violation of Privacy

While AI technology offers many potential benefits, there are also several significant challenges posed by its use. One of the primary challenges is the potential for AI to be used to violate privacy. AI systems require vast amounts of (personal) data, and if this data falls into the wrong hands, it can be used for nefarious purposes, such as identity theft or cyberbullying.

The Issue of Bias and Discrimination

Another challenge posed by AI technology is the potential for bias and discrimination. AI systems are only as unbiased as the data they are trained on; if that data is biased, the resulting system will be too. This can lead to discriminatory decisions that affect individuals based on factors such as race, gender, or socioeconomic status. It is essential to ensure that AI systems are trained on diverse data and regularly audited to prevent bias.

At first glance, the link between bias and discrimination in AI and privacy may not be immediately apparent. After all, privacy is often thought of as a separate issue related to the protection of personal information and the right to be left alone. However, the reality is that the two issues are intimately connected, and here’s why.

To start with, it is important to note that many AI systems rely on data to make decisions. This data can come from a variety of sources, such as online activity, social media posts, and public records. While this data may seem innocuous at first, it can reveal a lot about a person’s life, including their race, gender, religion, and political beliefs. As a result, if an AI system is biased or discriminatory, it can use this data to perpetuate these biases, leading to unfair or even harmful outcomes for individuals.

For example, imagine an AI system used by a hiring company to screen job applications. If the system is biased against women or people of color, it may use data about a candidate’s gender or race to unfairly exclude them from consideration. This harms the individual applicant and perpetuates systemic inequalities in the workforce.

The Issue of Job Displacements for Workers

A third challenge posed by AI technology is the potential for job loss and economic disruption. As AI systems become more advanced, they are increasingly capable of performing tasks that were previously done by humans. This can lead to job displacement, economic disruption in certain industries, and the need for individuals to retrain for new roles.

But the issue of job loss is also connected to privacy in a number of important ways. For one thing, the economic disruption caused by AI technology can lead to increased financial insecurity for workers. This, in turn, can lead to a situation where individuals are forced to sacrifice their privacy to make ends meet.

For example, imagine a worker has lost their job due to automation. They are struggling to pay their bills and make ends meet and are forced to turn to the gig economy to make money. In order to find work, they may be required to provide personal information to a platform, such as their location, work history, and ratings from previous clients. While this may be necessary to find work, it also raises serious concerns about privacy, as this data may be shared with third parties or used to target ads.

However, the issue of privacy and job loss is not just about the gig economy. It also relates to the ways in which AI technology is used in the hiring process. For example, some companies use AI algorithms to screen job applicants, analyzing their social media activity or online behavior to make decisions about their suitability for a particular role. This raises concerns about the accuracy of the data being used and questions about privacy, as job applicants may not be aware that this data is being collected and used in this way.

Ultimately, the issue of job loss and economic disruption caused by AI technology is closely tied to privacy because it can lead to situations where individuals are forced to sacrifice their privacy in order to survive in a changing economy.

The Issue of Data Abuse Practices

Finally, another significant challenge posed by AI technology is the potential for misuse by bad actors. AI can be used to create convincing fake images and videos, which can be used to spread misinformation or even manipulate public opinion. Additionally, AI can be used to create highly sophisticated phishing attacks, which can trick individuals into revealing sensitive information or clicking on malicious links.

The creation and dissemination of fake videos and images can have serious privacy implications. This is because these fabricated media often feature real people who may not have consented to their image being used in this way. This can lead to situations where individuals are harmed by the dissemination of fake media, either because it is used to spread false or damaging information about them or because it is used in a way that violates their privacy.

For example, consider a case in which an evil actor uses artificial intelligence to create a fake video showing a politician engaging in illegal or immoral behavior. Even if the video is clearly fake, it may still be shared widely on social media, leading to serious reputational harm for the politician in question. This not only violates their privacy but also has the potential to cause real-world harm.

The most recent AI technology presents many challenges that must be addressed to ensure that it is used ethically and responsibly. One reason why recent AI software has been associated with these challenges is that it often relies on machine learning algorithms, which are trained on large amounts of data. If that data contains biases, the algorithms will also be biased, leading to situations where AI perpetuates existing inequalities and discrimination. As AI continues to evolve, it is essential that we remain vigilant in addressing these challenges to ensure that AI is used for the greater good rather than for nefarious purposes that negatively affect our rights to privacy.

Underlying Privacy Issues in the Age of AI

In the age of AI, privacy has become an increasingly complex issue. With the vast amount of data being collected and analyzed by companies and governments, individuals’ private information is at greater risk than ever before.

Some of these issues include invasive surveillance, which can erode individual autonomy and exacerbate power imbalances, and unauthorized data collection, which can compromise sensitive personal information and leave individuals vulnerable to cyber attacks. These problems are often compounded by the power of BigTech companies, which have vast amounts of data at their disposal and significant influence over how that data is collected, analyzed, and used.

Let’s take a closer look at the implications of each of these problems.

The Power of Big Tech on Data

Big Tech companies have become some of the most powerful entities in the world, with enormous amounts of influence over the global economy and society as a whole. With the rise of AI and the coming shift to the metaverse, their power is only set to increase even further.

Today, Big Tech companies like Google, Amazon, and Meta have access to vast amounts of data, giving them unprecedented power to influence consumer behavior and shape the global economy. They are also increasingly involved in politics, as they have the ability to influence public opinion and shape government policy.

As we move towards the metaverse, where people will live, work, and interact in a virtual environment, Big Tech companies are likely to become even more powerful. The metaverse will generate the usage of data twenty times more than the internet today, creating even more opportunities for Big Tech companies to leverage their data and influence.

However, with this power comes great responsibility. Big Tech companies must be transparent about their data practices and ensure that the data they collect is used ethically and responsibly. They must also work to ensure that their platforms are inclusive and accessible to all rather than being controlled by a small group of powerful players.

The rise of Big Tech has given these companies unprecedented power, and their influence is only set to increase with the coming shift to the immersive internet. While this presents many exciting opportunities, Big Tech companies must take proactive measures to ensure that their power is used ethically and responsibly. By doing so, they can build a future where technology is used to benefit society as a whole rather than just a select few. Of course, it may be naive to think that Big Tech will do so voluntarily, so regulation will likely have to force Big Tech to take a different approach.

Data Collection and Use by AI Technologies

One of the most significant impacts of AI technology is the way it collects and uses data. AI systems are designed to learn and improve through the analysis of vast amounts of data. As a result, the amount of personal data collected by AI systems continues to grow, raising concerns about privacy and data protection.

More importantly, the use of personal data by AI systems is not always transparent. The algorithms used in AI systems can be complex, and it can be difficult for individuals to understand how their data is being used to make decisions that affect them. Lack of transparency can lead to distrust of AI systems and a feeling of unease.

To address these concerns, it is essential that organizations and companies that use AI technology take proactive measures to protect individuals’ privacy. This includes implementing strong data security protocols, ensuring that data is only used for the intended purpose, and designing AI systems that adhere to ethical principles.

Needless to say, transparency in the use of personal data by AI systems is critical. Individuals must be able to understand how their data is being used and have the ability to control the use of their data. This includes the ability to opt out of data collection and to request that their data be deleted.

By doing so, we can build a future where AI technologies are used to benefit society while protecting individuals’ privacy and data protection.

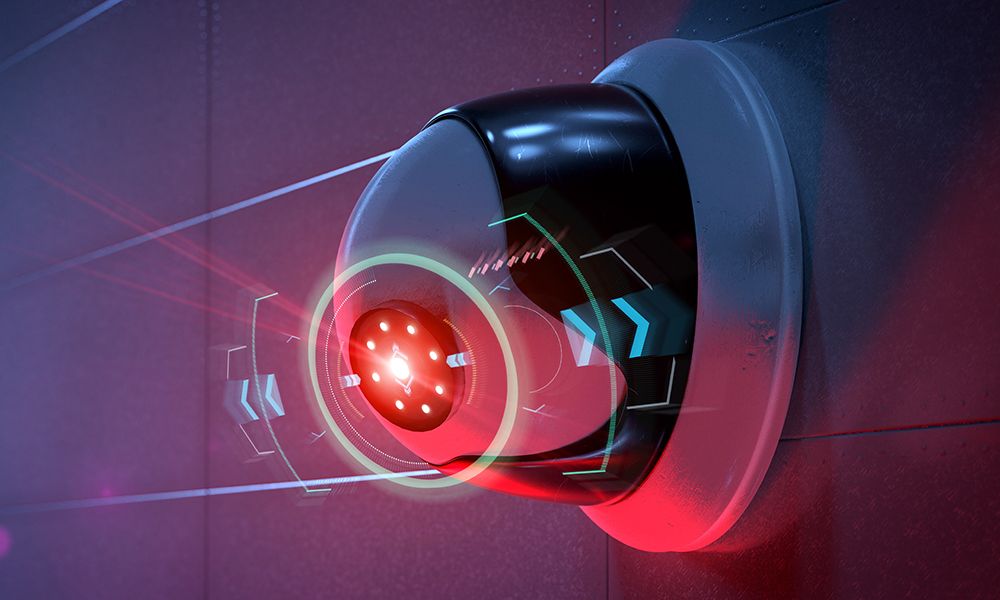

The Use of AI in Surveillance

One of the most controversial uses of AI technology is in the area of surveillance. AI-based surveillance systems have the potential to revolutionize law enforcement and security, but they also pose significant risks to privacy and civil liberties.

AI-based surveillance systems use algorithms to analyze vast amounts of data from a range of sources, including cameras, social media, and other online sources. This allows law enforcement and security agencies to monitor individuals and predict criminal activity before it occurs.

While the use of AI-based surveillance systems may seem like a valuable tool in the fight against crime and terrorism, it raises concerns about privacy and civil liberties. Critics argue that these systems can be used to monitor and control individuals, potentially losing freedom and civil liberties.

To make matters worse, the use of AI-based surveillance systems is not always transparent. It can be difficult for individuals to know when they are being monitored or for what purpose. This lack of transparency can erode trust in law enforcement and security agencies and create a sense of unease in the general public.

To address these concerns, the use of AI-based surveillance systems must be subject to strict regulation and oversight. This includes the development of clear policies and procedures for the use of these systems, as well as the establishment of independent oversight and review mechanisms.

Law enforcement and security agencies must be transparent about when and how these systems are used, and individuals must be able to access information about how their data is being collected and used. The integration of AI-based surveillance systems has undoubtedly brought significant advantages to law enforcement and security agencies. However, it is crucial to acknowledge these systems’ potential risks to our fundamental rights and freedoms. The lack of transparency and the potential for discrimination are just some of the concerns that must be addressed by regulatory bodies to ensure the protection of individual privacy and civil liberties.

Recently, The European Union (EU) Parliament has taken a significant step towards protecting individual privacy in the age of AI. A majority of the EU Parliament is now in favor of a proposal to ban the use of AI surveillance in public spaces. This proposal would prohibit the use of facial recognition and other forms of AI surveillance in public areas, except in cases where there is a specific public security threat. This decision reflects the growing concern about the potential for AI technology to be used in a way that infringes on individual privacy and other fundamental rights. By banning the use of AI surveillance in public, the EU Parliament is taking a strong stance toward ensuring that AI technology is developed and used in a way that respects individual privacy and other ethical considerations.

In my opinion, the use of AI technology in surveillance can only be justified if it is carried out in a responsible and ethical manner. By prioritizing individual privacy and civil liberties, we can build a future where AI technologies are harnessed to enhance security and protect society, without sacrificing the values that define us as a free and democratic society.

In the age of AI, our personal data is becoming increasingly valuable to organizations and businesses, and it is being used in ways that were once unimaginable. From facial recognition to predictive algorithms, AI is being used to collect, process, and analyze our personal information, often without our knowledge or consent.

For instance, generative AI, such as text and image generation tools, has become increasingly popular in recent years, enabling individuals to create content that mimics human-produced media. However, the use of generative AI raises significant privacy concerns, as companies that develop these tools may collect and analyze the data entered by users as prompts.

Users may enter a wide range of information as prompts, including personal information, images, and other sensitive data. This information can be used to train and improve the generative AI models, but it also raises questions about data security and privacy. Companies must ensure that they have adequate safeguards in place to protect this data, such as implementing robust data security measures and encryption protocols and complying with relevant privacy laws and regulations.

At the same time, users should be aware of the risks associated with sharing personal information when using generative AI tools. They should carefully consider what information they enter as prompts and be aware of the data protection policies and practices of the companies that develop these tools.

Ultimately, it is important that both companies and individuals take steps to ensure that privacy is protected in the age of generative AI so that the benefits of these technologies can be realized in a safe and responsible way.

In the next section, we’ll take a closer look at other pressing examples of privacy concerns in the age of AI and discuss their potential impact on individuals and society as a whole.

CASE 1. Google’s Location Tracking

Due to privacy concerns, Google’s location-tracking practices have come under intense scrutiny in recent years. The company tracks the location of its users, even when they have not given explicit permission for their location to be shared. This revelation came to light in 2018 when an Associated Press investigation found that Google services continued to store location data, even when users turned off location tracking. This was a clear breach of user trust and privacy, and Google faced significant backlash from users and privacy advocates.

Since 2018, Google has changed its location tracking policies and improved transparency regarding how it collects and uses location data. However, concerns remain regarding the extent of data collected, how it is used, and who has access to it. As one of the world’s largest tech companies, Google’s actions have far-reaching implications for individuals and society at large.

One of the biggest issues with Google’s location tracking practices is the potential for the misuse of personal data. Location data is incredibly sensitive, and if it falls into the wrong hands, it can be used to track individuals’ movements, monitor their behavior, and even be used for criminal activities. The implications of location data being leaked or hacked can be dire, and it is essential for companies like Google to ensure that they have robust security measures in place to protect user data. Also, there is the issue of third-party access to user data, which can be used for advertising purposes or even sold to other companies for profit.

CASE 2. AI-Powered Recommendations: My Personal Experience with Google’s Suggestion Engine

An example of privacy concerns in the age of AI is the invasive nature of Big Tech companies. I recently shared a personal experience I had about watching a show on Amazon Prime on Apple TV. Two days after finishing the show, I received news recommendations related to the show on a Google app on an iPhone, while I never watched that show on my iPhone. An alarming practice and it begs the question: does Google have full access to all of our apps and activities?

As someone who has been working with big data for over a decade, I know it is technically possible, but it is concerning that it is allowed. For this level of personalized recommendation to be made, Google would need to access information from other apps on the iPad (even with my privacy settings preventing this practice) or eavesdropping on my conversations using the microphone of my iPhone or iPad and connect it to the my Google account. Both are not allowed and are a massive breach of privacy.

The example of Google’s suggestive algorithm highlights the significant privacy concerns in the age of AI. The fact that Google is able to make personalized recommendations based on seemingly unrelated activities raises questions about the company’s access to our private data. While this level of personalization is technically possible, it is important to consider the ethical implications of such practices. As we continue relying more on AI and big data, it is critical to ensure privacy is respected and protected. It is vital that companies and policymakers take the necessary steps to establish clear guidelines and regulations to ensure that AI technology is developed and used in a way that upholds fundamental human rights and values.

CASE 3. The Use of AI in Law Enforcement

One example of the use of AI in law enforcement is the deployment of predictive policing software. This software uses data analysis and machine learning algorithms to predict where crimes are most likely to occur and who is most likely to commit them. While this technology may sound promising, it has come under scrutiny for perpetuating biases and reinforcing existing prejudices. For example, some predictive policing systems have been found to unfairly target minority communities, leading to allegations of racial profiling and discrimination.

Another example of the use of AI in law enforcement is facial recognition technology. This technology uses algorithms to match images of people’s faces to a database of known individuals, allowing law enforcement to identify and track individuals in real time. While facial recognition technology has the potential to help law enforcement solve crimes, it also raises concerns about privacy and civil liberties. In some cases, facial recognition systems have been found to misidentify individuals, leading to false accusations and wrongful arrests.

As law enforcement agencies integrate AI technologies, there is a risk that these systems may perpetuate and even exacerbate existing societal biases and injustices. Also, the use of AI in law enforcement raises questions about transparency and accountability. It can be difficult to understand how these systems operate and make decisions, making it crucial to develop regulations and oversight mechanisms to ensure that the use of AI is transparent, ethical, and respects individual rights and freedoms.

CASE 4. The Use of AI in Hiring and Recruitment

The use of AI in hiring and recruitment has become increasingly popular in recent years. Companies are turning to AI-powered tools to screen and select job candidates, citing benefits such as increased efficiency and objectivity. However, these tools can also raise significant concerns about fairness and bias. One notable example is the case of Amazon’s AI-powered recruiting tool, which was found to discriminate against women because the system was trained on resumes from mostly male candidates.

This highlights the potential for AI to perpetuate existing biases and discrimination, and the need for careful consideration and testing of these tools to ensure they are not inadvertently perpetuating unfair practices. As the use of AI in hiring and recruitment continues to grow, it is crucial that we prioritize transparency and accountability to prevent discrimination and ensure fairness in the workplace.

Solutions to Overcome These Challenges

As we continue to integrate AI into various aspects of our lives, it is clear that privacy and ethical considerations are becoming increasingly important. The potential benefits of AI are vast, but so are the risks associated with its use. As a society, we must take a proactive approach to address these challenges to protect individual privacy and ensure that AI is used ethically and responsibly.

Organizations and companies that use AI must prioritize privacy and ethical considerations in their AI systems’ design and implementation. This includes being transparent about data collection and usage, ensuring data security, regularly auditing for bias and discrimination, and designing AI systems that adhere to ethical principles. Companies that prioritize these considerations are more likely to build trust with their customers, avoid reputational damage, and build stronger relationships with their stakeholders.

As AI continues to advance and transform the world, it is crucial that we do not lose sight of the importance of privacy and ethical considerations. By prioritizing privacy and adopting strong data protection policies, we can help ensure that AI technology is developed and used in a way that respects individual privacy and other ethical considerations.

Privacy is a fundamental human right, and as AI technology continues to advance, it is critical that we prioritize privacy and ensure that individuals’ rights are protected. This requires a multifaceted approach that involves the cooperation of governments, organizations, and individuals. Governments should implement regulations to ensure that AI is developed and used in a way that respects individual privacy and other ethical considerations. Organizations should prioritize privacy as a core value and adopt strong data protection policies that respect individual privacy.

Finally, individuals should be empowered with transparency and control over their personal data. By prioritizing privacy and adopting strong data protection policies, we can help ensure that AI technology is developed and used in a way that is both effective and privacy-respecting, ultimately leading to a future where individuals can benefit from the transformative power of AI without sacrificing their fundamental right to privacy.

Global Approaches to Protecting Privacy in the Age of AI

The issue of AI and privacy is a global concern, and countries around the world have taken various measures to protect their citizens’ privacy. In the USA, the California Consumer Privacy Act (CCPA) is the most comprehensive privacy law, giving Californians the right to know what personal information companies collect and request deletion. The US government has also introduced several bills, such as the Consumer Online Privacy Rights Act (COPRA) and the SAFE DATA Act.

In Europe, the General Data Protection Regulation (GDPR) is the most significant privacy regulation, setting a global standard for privacy regulations. It provides a set of rules to protect the personal data of EU citizens and applies to all companies operating within the EU. For example, in 2020, the French data protection regulator fined Google 50 million euros for violating the GDPR. The European Union has also proposed a new regulation called the Digital Services Act, which aims to strengthen online privacy and give users more control over their data.

China has implemented several measures to protect citizens’ privacy, including the Cybersecurity Law, which requires companies to protect personal information and gives citizens the right to know how their data is being used. However, the Chinese government has been criticized for using AI to monitor citizens’ activities and suppress dissent. In 2020, the National People’s Congress passed a new personal information protection law, which took effect in November 2021. The new law imposes stricter rules on companies collecting and processing personal information and introduces penalties for violations.

Australia has enacted laws such as the Privacy Act 1988, which regulates the handling of personal information by government agencies and private organizations and gives citizens the right to access and correct their personal information. However, critics argue that the Privacy Act is outdated and needs to be updated to address emerging privacy concerns posed by AI. In fact, in late 2022, the Australian government released a discussion paper outlining proposed reforms to the Privacy Act, including stronger penalties for breaches and a requirement for companies to conduct privacy impact assessments.

Many other countries are taking different approaches to protecting their citizens’ privacy in the age of AI, and the development of privacy laws is an ongoing process with changes and updates likely to happen in the future.

While the responsibility of protecting privacy falls on many parties, including governments, companies, and individuals, it is essential for consumers to take an active role in protecting their personal information. By staying informed, utilizing privacy tools and settings, and being mindful of their online activities, consumers can help safeguard their privacy in the age of AI.

The Future of Privacy in the Age of AI

As AI technologies continue to advance and become more integrated into our daily lives, the future of privacy is at a critical crossroads. With the rise of the metaverse and the increasing amount of data we generate, it is essential that we begin to consider the future implications of these technologies for the security and privacy of our data.

The decisions we make today will have far-reaching consequences for future generations, and it is up to us to ensure that we build a future where AI technologies are used in a way that benefits society as a whole while also respecting and protecting individual rights and freedoms. In this section, we’ll explore some of the potential opportunities for privacy in the age of AI and what steps we can take to shape a more positive future.

The Need for Regulation

As AI systems become more sophisticated and are able to process and analyze vast amounts of data, the potential for misuse and abuse of this technology grows.

To ensure that AI technology is developed and used in a way that respects individual rights and freedoms, it is essential that it be subject to effective regulation and oversight. This includes not only the collection and use of data by AI systems but also the design and development of these systems to ensure that they are transparent, explainable, and unbiased.

Effective regulation of AI technology will require collaboration between governments, industry, and civil society to establish clear standards and guidelines for the ethical use of AI. This will also require ongoing monitoring and enforcement to ensure these standards are upheld.

Without proper regulation, there is a risk that the increasing use of AI technology will lead to further erosion of privacy and civil liberties, as well as exacerbating existing inequalities and biases in society. By establishing a regulatory framework for AI, we can help ensure that this powerful technology is used for the greater good while protecting individual rights and freedoms.

The Importance of Data Security and Encryption

Data breaches and cyber-attacks can have severe consequences, such as identity theft, financial loss, and reputational damage. In recent years, several high-profile data breaches have highlighted the importance of data security, and the use of encryption to protect sensitive information has become increasingly important.

Encryption is the process of converting information into an unreadable format to prevent unauthorized access. It provides a way to protect data both in storage and during transmission. Encryption is essential for protecting sensitive data, such as personal information, financial data, and trade secrets. As AI technology advances, the need for robust data security and encryption becomes even more critical. The vast amount of data that AI relies on means that any breach can have far-reaching consequences, making it essential to implement security measures to safeguard against data loss or theft.

For example, consider a healthcare organization that uses AI technologies to analyze patient data. This data may include sensitive information such as medical histories, diagnoses, and treatment plans. If this data were to be stolen or accessed by unauthorized parties, it could have serious consequences for the patients involved. By using strong encryption to protect this data, the healthcare organization can ensure that it remains confidential and secure.

Another example is a financial institution that uses AI to analyze customer data for fraud detection. The data collected by the institution may include personal and financial information, such as account numbers and transaction histories. If this data were to fall into the wrong hands, it could be used for identity theft or other fraudulent activities. By using encryption to protect this data, the financial institution can prevent unauthorized access and keep its customers’ information safe.

Both of these examples make the importance of data security and encryption clear. Organizations that use AI must take data security seriously and implement robust encryption measures to protect the sensitive data they collect. Failure to do so could result in serious consequences for both the organization and the individuals whose data has been compromised.

The Correlation with Quantum Computing

The rise of quantum computing poses a significant threat to data security and encryption and underscores the need for increased investment in advanced encryption techniques.

Quantum computers can break traditional encryption algorithms currently used to secure sensitive data, such as financial transactions, medical records, and personal information. This is because quantum computers can perform calculations much faster than classical computers, allowing them to crack encryption keys and reveal the underlying data.

To address this threat, researchers and industry experts are developing new encryption techniques that are specifically designed to resist quantum computing attacks. These include post-quantum cryptography, which uses mathematical problems that are believed to be resistant to quantum computers, and quantum key distribution, which enables the secure exchange of cryptographic keys over long distances.

As the development of quantum computing technology continues, it is essential that organizations and governments take steps to ensure the security of their sensitive data. This includes investing in advanced encryption techniques specifically designed to resist quantum computing attacks and implementing robust data security measures to prevent unauthorized access and data breaches.

The Role of Consumers in Protecting their Privacy

Protecting our privacy is more important than ever. While regulations and data security measures can provide some level of protection, individuals also play a vital role in protecting their own privacy. Consumers can take several steps to safeguard their personal information.

First, it is essential to understand what data is being collected and how it is being used. This information can usually be found in privacy policies and terms of service agreements. Consumers should take the time to read and understand these documents before using any products or services that collect their data.

Second, individuals can take advantage of privacy tools and settings that are often available within software and social media platforms. For example, many websites offer the option to opt out of targeted advertising or limit data sharing with third-party companies. Similarly, social media platforms often provide privacy settings to control who can view or access personal information.

Lastly, consumers should be mindful of their online activities and the information they choose to share. Social media posts, online purchases, and even simple web searches can reveal personal information that could be used to compromise privacy. Being aware of the information that is being shared and taking steps to limit its dissemination can go a long way